Speculative execution—the trick CPUs use to guess future instructions and keep pipelines full—is also an underused superpower for web automation and AI browser agents. Instead of plodding through a single plan, an agent can branch on ambiguous decisions, pursue multiple options in parallel, prefetch the heavy resources, and then commit the best path while rolling back the losers. Done right, this can cut task latency dramatically, improve robustness under uncertainty, and keep per-task costs predictable.

In this article, I lay out a practical, opinionated design for speculative execution in browser agents using the Chrome DevTools Protocol (CDP): how to fork action branches in parallel tabs, share cache, capture deterministic snapshots, gate writes to avoid unintended side effects, commit a winning branch, and roll back cleanly. I also cover resource-aware branch prediction and cost control, and propose an evaluation plan suitable for technical teams.

Why speculation for browser agents now

- Latency is king for interactive tasks: Many web tasks are IO-bound (network and rendering), not CPU-bound. Parallelizing alternative paths can improve time-to-first-useful-outcome by 2–4× even if you discard some work.

- LLM uncertainty compounds: When a model is 60–80% confident about multiple UI actions, exploring the top-K plausible plans concurrently can raise the chance at least one plan succeeds on the first try.

- Commodity parallelism is cheap: A single headless Chrome can service multiple tabs with shared cache, allowing a degree of speculative concurrency with modest extra cost.

- CDP matured: CDP’s Target, Network, DOMSnapshot, IndexedDB, Storage, Fetch, and Emulation domains give enough levers to implement isolation, interception, and snapshotting for reproducible exploration.
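To make the top-K argument concrete, here is a small worked calculation. It assumes branch outcomes are independent, which real branches rarely are, so read the result as an optimistic bound rather than a prediction. The function name `probAnySucceeds` is invented for this sketch.

```javascript
// Probability that at least one of several branches succeeds,
// ASSUMING independent outcomes (an idealization, not a measured property).
function probAnySucceeds(successProbs) {
  const allFail = successProbs.reduce((acc, p) => acc * (1 - p), 1);
  return 1 - allFail;
}

const single = probAnySucceeds([0.6]);
const topThree = probAnySucceeds([0.6, 0.6, 0.6]);
console.log(single.toFixed(3), topThree.toFixed(3)); // prints "0.600 0.936"
```

Under that assumption, three 60% plans explored together succeed on the first try about 94% of the time, which is the intuition behind exploring the top-K plans concurrently.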

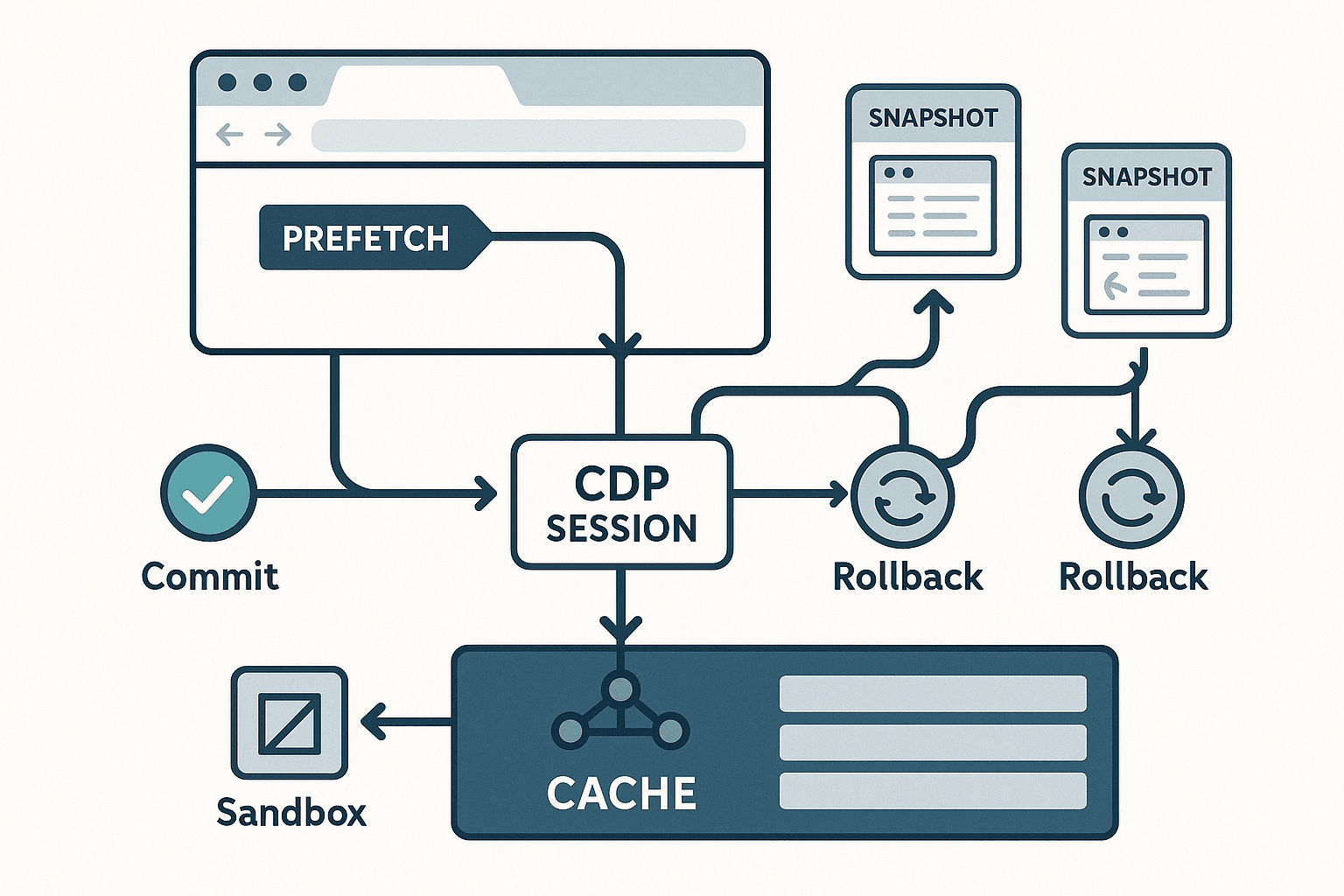

A high-level architecture

- Orchestrator: A controller that maintains a search tree of plans. It selects branches to expand, terminates low-value branches, and chooses a winner to commit.

- Browser runtime: A headless Chrome/Chromium with CDP access. Use a single profile (user data dir) to share HTTP cache across tabs unless isolation requires otherwise.

- Branch manager: Spawns one Page (tab) per branch within the same BrowserContext to share cache, or separate contexts for strong isolation. Attaches a CDP session to each Page.

- Write gate: A request interceptor that blocks or virtualizes non-idempotent operations (POST/PUT/PATCH/DELETE) during speculation. Only the commit phase permits writes to the real target.

- Snapshot/rollback subsystem: Periodically captures state required to deterministically replay or rollback: cookies, storage, network configuration, DOM snapshot, action log, and deterministic seeds.

- Prefetcher: Aggressively fetches resources likely needed by any branch (e.g., result pages, assets) and populates cache. Branches then load instantly from cache.

- Branch predictor: Allocates limited concurrency to branches based on learned utility estimates and cost (multi-armed bandit or heuristic scoring).

- Telemetry and cost control: Observes CPU, memory, network, and time/tokens spent; applies back-pressure to keep within budget.

CDP building blocks you actually need

- Target domain: Create and attach to tabs and contexts.

  - Target.createTarget, Target.attachToTarget, Target.setAutoAttach

  - Target.createBrowserContext for isolation when needed

- Network domain: Control caching, emulate network, monitor requests.

  - Network.enable, Network.setCacheDisabled(false), Network.emulateNetworkConditions

  - Network.getCookies/Network.getAllCookies

- Fetch domain: Intercept requests and optionally synthesize responses.

  - Fetch.enable, Fetch.requestPaused, Fetch.continueRequest, Fetch.failRequest, Fetch.fulfillRequest

- Page and DOMSnapshot domains: Navigate and capture reproducible snapshots.

  - Page.navigate, Page.captureSnapshot (MHTML), DOMSnapshot.captureSnapshot

- Storage and IndexedDB domains: Enumerate and copy local storage state.

  - Storage.getUsageAndQuota, IndexedDB.requestDatabaseNames, IndexedDB.requestData

- Emulation domain: Pin down sources of non-determinism.

  - Emulation.setTimezoneOverride, Emulation.setLocaleOverride, Emulation.setUserAgentOverride, Emulation.setFocusEmulationEnabled

- Runtime domain: Evaluate scripts to instrument pages.

  - Runtime.evaluate for seeding Math.random/Date and extracting storage

Parallel branches with multi-session CDP

You can fork branches as separate tabs in a single BrowserContext to share HTTP cache. The orchestration loop is straightforward:

- Define decision points where alternatives multiply (e.g., choose a search result, click a tab, fill a different form field).

- Create one Page per alternative with a copy of the current action log and deterministic seeds.

- Let each Page continue in parallel for a fixed budget (time/steps) and report a utility estimate (e.g., goal proximity, confidence, or external reward function).

- Cull branches with poor utility; keep the best few alive.
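The loop above can be sketched without a browser at all. In this minimal version, `runBranch` is a hypothetical caller-supplied async function that would drive one tab and resolve to a utility estimate; here it is replaced by a timer-based stand-in with made-up delays and scores.

```javascript
// One expansion round: run every alternative under a wall-clock budget,
// then keep the top `keep` branches by utility and cull the rest.
// `runBranch` is a hypothetical callback; in a real agent it would drive a tab.
async function expandRound(alternatives, runBranch, { budgetMs = 2000, keep = 2 } = {}) {
  const outcomes = await Promise.all(
    alternatives.map(async (alt) => {
      let timer;
      const timeout = new Promise((resolve) => {
        timer = setTimeout(() => resolve({ alt, status: 'timeout', utility: null }), budgetMs);
      });
      const run = Promise.resolve()
        .then(() => runBranch(alt))
        .then((utility) => ({ alt, status: 'ok', utility }))
        .catch((err) => ({ alt, status: 'error', utility: null, error: String(err) }));
      const outcome = await Promise.race([run, timeout]);
      clearTimeout(timer);
      return outcome;
    })
  );
  // Only branches that reported a utility in time compete for survival.
  const ranked = outcomes
    .filter((o) => o.status === 'ok')
    .sort((a, b) => b.utility - a.utility);
  return {
    survivors: ranked.slice(0, keep),
    culled: [...ranked.slice(keep), ...outcomes.filter((o) => o.status !== 'ok')],
  };
}

// Demo with simulated branches (delays and utilities are made up).
const fakeRun = ({ delayMs, utility }) =>
  new Promise((resolve) => setTimeout(() => resolve(utility), delayMs));

expandRound(
  [
    { name: 'result-1', delayMs: 10, utility: 0.4 },
    { name: 'result-2', delayMs: 20, utility: 0.9 },
    { name: 'result-3', delayMs: 300, utility: 1.0 }, // too slow for the budget
  ],
  fakeRun,
  { budgetMs: 100, keep: 1 }
).then(({ survivors, culled }) => {
  console.log('kept:', survivors.map((o) => o.alt.name));
  console.log('culled:', culled.map((o) => `${o.alt.name} (${o.status})`));
});
```

Note that the timeout only stops waiting for a slow branch; it does not cancel the underlying work. A real controller would also close the tab for every culled branch.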

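The most reliable path to shared cache is to run all branches in the same Chrome profile and BrowserContext. Chrome’s HTTP cache is per profile; incognito contexts have separate ephemeral caches. Recent Chrome versions also partition the cache by top-level site, so expect sharing to be most dependable when branches load resources under the same site. In practice: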

- Use a single userDataDir for the headless session.

- Prefer a single BrowserContext for branches if you want shared cache.

- If you need storage/cookie isolation between branches, use multiple BrowserContexts but understand you then lose HTTP cache sharing between those contexts.

Prefetch and shared cache

Prefetch is where the wins come from. If you know a set of likely next URLs, you can prefetch them in a background tab or via injected link rel=prefetch tags, warming HTTP cache for all branches. The older rel=prerender hint is deprecated in Chrome, so do not count on it to render pages ahead of time.

Practical tactics:

- Predict next resources: Early in the page, parse and score candidate links. For search results, prefetch the first N result pages and key assets.

- Use GET-only, obey robots and origin rules: Restrict prefetch to GET and same-origin where possible; do not prefetch endpoints that may have side effects even via GET.

- Let Chrome cache do the heavy lifting: Once prefetched in the shared BrowserContext, other tabs should see cache hits.

- Coordinate via Fetch/Network events: Observe requests across pages; when one branch fetches large assets, others benefit automatically without extra code.
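As an illustration of the first two tactics, the sketch below filters and ranks candidate links before anything is prefetched. The `score` field, the `RISKY_PATH` pattern, and the function name are assumptions made for this example; a real deployment would tune the deny-list per site.

```javascript
// Paths that often trigger side effects even via GET (illustrative, not exhaustive).
const RISKY_PATH = /\b(logout|signout|delete|remove|unsubscribe|checkout|buy|confirm)\b/i;

// Pick at most `limit` prefetch URLs: http(s) only, same-origin by default,
// no risky-looking paths, de-duplicated after dropping the #fragment.
function selectPrefetchCandidates(pageUrl, links, { limit = 5, sameOriginOnly = true } = {}) {
  const origin = new URL(pageUrl).origin;
  const seen = new Set();
  const candidates = [];
  for (const { href, score = 0 } of links) {
    let url;
    try {
      url = new URL(href, pageUrl); // resolves relative hrefs
    } catch {
      continue; // unparseable href
    }
    if (url.protocol !== 'http:' && url.protocol !== 'https:') continue;
    if (sameOriginOnly && url.origin !== origin) continue;
    if (RISKY_PATH.test(url.pathname + url.search)) continue;
    url.hash = '';
    const key = url.toString();
    if (seen.has(key)) continue;
    seen.add(key);
    candidates.push({ url: key, score });
  }
  return candidates
    .sort((a, b) => b.score - a.score)
    .slice(0, limit)
    .map((c) => c.url);
}

console.log(
  selectPrefetchCandidates('https://example.com/search?q=a', [
    { href: '/results/1', score: 0.9 },
    { href: '/logout', score: 1.0 },
    { href: 'https://other.example/x', score: 0.8 },
    { href: '/results/2#top', score: 0.5 },
  ])
);
```

The returned URLs can then be fed to whatever prefetch mechanism you use, such as injected prefetch links or a helper tab.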

Minimal Node.js example using Puppeteer (CDP under the hood) showing multi-page branching and request gating:

```javascript
const puppeteer = require('puppeteer');

// Install a request interceptor that blocks writes (and analytics noise) while speculating.
async function withWriteGate(page, { speculative }) {
  await page.setRequestInterception(true);
  page.on('request', (req) => {
    const method = req.method();
    const url = req.url();
    // Block non-idempotent methods during speculation
    if (speculative && ['POST', 'PUT', 'PATCH', 'DELETE'].includes(method)) {
      console.warn(`Write-gate: blocking ${method} ${url}`);
      return req.abort('blockedbyclient');
    }
    // Strip analytics during speculation to reduce noise/cost
    if (speculative && /google-analytics|segment|mixpanel|sentry/.test(url)) {
      return req.abort('blockedbyclient');
    }
    return req.continue();
  });
}

(async () => {
  const browser = await puppeteer.launch({
    headless: true,
    userDataDir: './profile-shared-cache', // share HTTP cache
    args: [
      '--disable-features=IsolateOrigins,site-per-process',
      '--disable-renderer-backgrounding',
    ],
  });
  const context = browser.defaultBrowserContext();

  // Seed branch pages from a canonical URL
  const seedUrl = 'https://example.com/search?q=speculative';
  const branches = await Promise.all([
    context.newPage(),
    context.newPage(),
    context.newPage(),
  ]);
  for (const [i, page] of branches.entries()) {
    await withWriteGate(page, { speculative: true });
    await page.setUserAgent(`BranchAgent/1.0 (branch=${i})`);
    await page.goto(seedUrl, { waitUntil: 'networkidle2' });
  }

  // Prefetch likely next pages in one branch to warm cache for all
  await branches[0].evaluate(() => {
    const links = [...document.querySelectorAll('a[href]')]
      .map((a) => a.href)
      .slice(0, 10);
    links.forEach((href) => {
      const link = document.createElement('link');
      link.rel = 'prefetch';
      link.href = href;
      document.head.appendChild(link);
    });
  });

  // Each branch follows a different candidate link
  const candidates = await branches[0].evaluate(() =>
    [...document.querySelectorAll('a[href]')].slice(0, 3).map((a) => a.href)
  );
  await Promise.all(
    branches.map((page, i) =>
      // A page with fewer links than branches leaves the extra branches on the seed page
      candidates[i]
        ? page.goto(candidates[i], { waitUntil: 'domcontentloaded' })
        : Promise.resolve()
    )
  );

  // Score branches by some heuristic (title match, presence of target selector, etc.)
  async function score(page) {
    return page.evaluate(() => {
      const good = document.querySelector('h1, h2');
      return good ? good.textContent.length : 0;
    });
  }
  const scores = await Promise.all(branches.map(score));
  const bestIdx = scores.indexOf(Math.max(...scores));
  console.log('Branch scores:', scores, 'best:', bestIdx);

  // Commit: disable write-gate for the winner only.
  // In Puppeteer, easiest is to open a fresh page and replay winning actions.
  // For demo, we just continue on the winning page.

  // Close losers
  await Promise.all(branches.map((p, i) => (i === bestIdx ? Promise.resolve() : p.close())));

  // Proceed with winning branch (enable writes if needed in your controller)
  await browser.close();
})();
```

This is intentionally minimal; a production system would maintain an explicit action log and re-instantiate a clean “commit page” to replay the winning plan without any speculative instrumentation.

Deterministic snapshots and rollback

A full browser VM snapshot is not generally exposed via CDP, so we approximate determinism by controlling sources of randomness and recording enough state to reconstruct a page at well-defined boundaries.

Targets for determinism:

- Randomness: Seed Math.random and Date.now; control crypto.getRandomValues for non-security-critical flows (do not override on pages where security matters). Set a fixed timezone and locale.

- Network: Freeze network conditions; avoid request timing races by waiting for network-idle states. Where APIs return randomized content, speculative branches should not rely on brittle selectors.

- Storage and cookies: Capture per-origin cookies, localStorage, sessionStorage, and (when necessary) IndexedDB slices.

- DOM and inputs: Capture the DOM tree, including form control values, and a compact trace of user actions.

Useful CDP calls:

- DOMSnapshot.captureSnapshot for the flattened DOM, layout, and requested computed styles in a structured format.

- Page.captureSnapshot to MHTML if you need offline restore for visual comparison and content hashing.

- Network.getCookies and Network.getAllCookies for session cookies.

- Runtime.evaluate to dump localStorage/sessionStorage to JSON; IndexedDB domain to enumerate and copy records (be selective for performance).

- Emulation.setTimezoneOverride and Emulation.setLocaleOverride to remove locale variance.
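Once state is captured, a content hash gives a cheap way to tell whether two branches (or a replay and its original) ended up in the same place. The sketch below is one possible approach using Node's built-in crypto module; the snapshot shape and the `snapshotHash` name are assumptions for illustration.

```javascript
const { createHash } = require('node:crypto');

// Rebuild the value with object keys in sorted order so that logically equal
// snapshots serialize identically. Array order is preserved, so sort cookie
// arrays (for example by name) before hashing if their order is not stable.
function canonicalize(value) {
  if (Array.isArray(value)) return value.map(canonicalize);
  if (value && typeof value === 'object') {
    return Object.keys(value)
      .sort()
      .reduce((acc, key) => {
        acc[key] = canonicalize(value[key]);
        return acc;
      }, {});
  }
  return value;
}

// SHA-256 over the canonical JSON form of a snapshot object.
function snapshotHash(snapshot) {
  return createHash('sha256')
    .update(JSON.stringify(canonicalize(snapshot)))
    .digest('hex');
}

const a = snapshotHash({ cookies: [{ name: 'sid', value: '1' }], localStorage: { a: '1', b: '2' } });
const b = snapshotHash({ localStorage: { b: '2', a: '1' }, cookies: [{ value: '1', name: 'sid' }] });
console.log(a === b); // prints "true": key order does not affect the hash
```

Volatile fields such as timestamps or CSRF tokens would need to be stripped before hashing, otherwise every snapshot looks unique.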

A pragmatic rollback strategy:

- Keep a canonical tab parked at the last safe boundary (e.g., post-login homepage). Do not mutate it.

- Fork speculative branches from that boundary; they carry their own local divergent state.

- When a winner is chosen, re-initialize a clean commit tab from the canonical boundary by:

  - Restoring cookies and basic storage (if needed for session continuity).

  - Replaying the winning action log deterministically.

  - Allowing writes only in the commit tab.

- Close all speculative tabs, which implicitly discards their local state.

This strategy avoids the hardest problem (exact JS heap snapshot/restore) and still gains most of the benefit, because web UI tasks are naturally structured around navigation boundaries where replay is feasible and stable.
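The replay half of that strategy depends on an action log that can be forked per branch and re-run against a clean tab. Here is a minimal sketch; the `executor` interface (an object whose methods are named after action types) is hypothetical and would wrap Puppeteer or CDP calls in practice.

```javascript
// Append-only action log. Branches fork it at a decision point and diverge.
class ActionLog {
  constructor(entries = []) {
    this.entries = entries.map((e) => ({ ...e }));
  }
  record(type, args) {
    this.entries.push({ seq: this.entries.length, type, args });
    return this;
  }
  fork() {
    return new ActionLog(this.entries);
  }
}

// Replay a log in order; stop at the first divergence so the caller can replan.
async function replay(log, executor) {
  for (const { seq, type, args } of log.entries) {
    const handler = executor[type];
    if (typeof handler !== 'function') {
      return { ok: false, failedAt: seq, reason: `unknown action: ${type}` };
    }
    try {
      await handler.call(executor, args);
    } catch (err) {
      return { ok: false, failedAt: seq, reason: err.message };
    }
  }
  return { ok: true, failedAt: null, reason: null };
}

// Demo with a fake executor that only records what it was asked to do.
const calls = [];
const fakeExecutor = {
  goto: async ({ url }) => calls.push(`goto ${url}`),
  click: async ({ selector }) => calls.push(`click ${selector}`),
};
const base = new ActionLog().record('goto', { url: 'https://example.com/search?q=widgets' });
const winner = base.fork().record('click', { selector: 'a.result:nth-of-type(2)' });

replay(winner, fakeExecutor).then((result) => console.log(result.ok, calls));
```

Returning the failing sequence number, rather than throwing, makes it straightforward to hand control to an adaptive replanning loop when replay diverges.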

Seeding randomness and time via a script injected before page load (Puppeteer's evaluateOnNewDocument):

```javascript
async function enforceDeterminism(page, { seed = 42, timezone = 'UTC', locale = 'en-US' } = {}) {
  const client = await page.target().createCDPSession();
  await client.send('Emulation.setTimezoneOverride', { timezoneId: timezone });
  await client.send('Emulation.setLocaleOverride', { locale });

  // Seed Math.random and coarsen Date.now before any page script runs
  await page.evaluateOnNewDocument((seed) => {
    (function () {
      let s = (seed >>> 0) || 1; // xorshift state must be non-zero
      function xorshift() {
        s ^= s << 13;
        s ^= s >>> 17;
        s ^= s << 5;
        return (s >>> 0) / 0x100000000; // in [0, 1), like Math.random
      }
      Math.random = xorshift;

      const origNow = Date.now;
      const start = origNow();
      Date.now = () => start + Math.floor((origNow() - start) / 100) * 100; // coarsen to 100 ms

      // WARNING: Overriding crypto is unsafe for sensitive pages; feature-flag if needed
      if (window.crypto && window.crypto.getRandomValues) {
        window.crypto.getRandomValues = (arr) => {
          for (let i = 0; i < arr.length; i++) arr[i] = Math.floor(xorshift() * 256);
          return arr;
        };
      }
    })();
  }, seed);
}
```

Capturing storage and cookies (selectively):

```javascript
async function snapshotState(page) {
  const client = await page.target().createCDPSession();
  await client.send('Network.enable');
  const cookies = (await client.send('Network.getAllCookies')).cookies;

  // Web storage is read from the page's current origin only
  const local = await page.evaluate(() => {
    const ls = {};
    const ss = {};
    for (let i = 0; i < localStorage.length; i++) {
      const k = localStorage.key(i);
      ls[k] = localStorage.getItem(k);
    }
    for (let i = 0; i < sessionStorage.length; i++) {
      const k = sessionStorage.key(i);
      ss[k] = sessionStorage.getItem(k);
    }
    return { ls, ss };
  });
  return { cookies, localStorage: local.ls, sessionStorage: local.ss };
}
```

Restoring to a fresh tab before commit:

```javascript
async function restoreState(page, snapshot) {
  const client = await page.target().createCDPSession();
  await client.send('Network.enable');

  // Clear existing cookies for safety
  const existing = (await client.send('Network.getAllCookies')).cookies;
  for (const c of existing) {
    await client.send('Network.deleteCookies', { name: c.name, domain: c.domain, path: c.path });
  }

  for (const c of snapshot.cookies) {
    const cookie = {
      name: c.name,
      value: c.value,
      domain: c.domain,
      path: c.path,
      httpOnly: c.httpOnly,
      secure: c.secure,
      sameSite: c.sameSite,
    };
    // Session cookies are reported with expires = -1; omit the field so they stay session cookies
    if (!c.session && c.expires > 0) cookie.expires = c.expires;
    await client.send('Network.setCookie', cookie);
  }

  // Web storage is per origin: the page must already be on the target origin
  await page.evaluate((state) => {
    localStorage.clear();
    sessionStorage.clear();
    Object.entries(state.localStorage).forEach(([k, v]) => localStorage.setItem(k, v));
    Object.entries(state.sessionStorage).forEach(([k, v]) => sessionStorage.setItem(k, v));
  }, { localStorage: snapshot.localStorage, sessionStorage: snapshot.sessionStorage });
}
```

Write-gating and side-effect management

The most delicate part of speculation is preventing unintended writes. Not all GETs are safe, and many flows require POST to advance. There is no universal solution, but you can layer strategies:

- Strict (safe-by-default): Block all non-idempotent methods in speculative branches. Works for browse-only tasks; will under-explore when POST is necessary.

- Shadow environment: For sites you control, mirror production to a staging domain; rewrite requests during speculation (via Fetch) to the shadow domain. You get realistic responses without production writes.

- Shadow identity: Use separate user accounts per branch. Even if a POST occurs, each branch mutates a sandbox account. The commit phase replays writes from a clean commit tab using the real account.

- Response simulation: Intercept POSTs and synthesize plausible responses in the branch without contacting the server. This requires site-specific adapters and is brittle; use sparingly.

- Time-delayed writes: Queue write requests; if the branch wins, flush the queue from the commit tab. Feasible for some APIs where idempotency keys can be used.

Implementing a basic write gate via CDP Fetch domain (lower-level than Puppeteer’s requestInterception):

```javascript
async function enableFetchGate(client, { speculative }) {
  // Pause every request at the Request stage so it can be inspected
  await client.send('Fetch.enable', {
    patterns: [{ urlPattern: '*', requestStage: 'Request' }],
  });

  client.on('Fetch.requestPaused', async (evt) => {
    const { requestId, request } = evt;
    const method = request.method;
    if (speculative && ['POST', 'PUT', 'PATCH', 'DELETE'].includes(method)) {
      // Fail writes while speculating
      await client.send('Fetch.failRequest', { requestId, errorReason: 'BlockedByClient' });
    } else {
      await client.send('Fetch.continueRequest', { requestId });
    }
  });
}
```
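The time-delayed writes strategy listed earlier can be layered on top of a gate like this: instead of simply failing a write, record it and flush it later from the commit tab. The sketch below is one way to structure that queue. The class name, the entry shape, and the idea of forwarding the key as an `Idempotency-Key` header are assumptions; whether a given API honors such a key is site-specific.

```javascript
const { randomUUID } = require('node:crypto');

// Holds writes intercepted during speculation until a branch wins.
class DeferredWriteQueue {
  constructor() {
    this.pending = [];
  }

  // Called by the write gate instead of forwarding the request.
  enqueue({ method, url, body }) {
    const entry = { method, url, body, idempotencyKey: randomUUID() };
    this.pending.push(entry);
    return entry.idempotencyKey;
  }

  // Losing branch: drop everything. Returns how many writes were discarded.
  discard() {
    const count = this.pending.length;
    this.pending = [];
    return count;
  }

  // Winning branch: send in order. If `send` throws, the failed entry stays
  // at the head of the queue so a retry reuses the same idempotency key.
  async flush(send) {
    const sent = [];
    while (this.pending.length > 0) {
      await send(this.pending[0]);
      sent.push(this.pending.shift());
    }
    return sent;
  }
}

// Demo with a stand-in sender; a real one would issue the request from the commit tab.
const queue = new DeferredWriteQueue();
queue.enqueue({ method: 'POST', url: 'https://example.com/cart', body: '{"sku":"w-1"}' });
queue
  .flush(async (entry) => console.log('sending', entry.method, entry.url))
  .then((sent) => console.log('flushed', sent.length, 'write(s)'));
```

This only helps for flows where the speculative branch can make progress without the server's response to the write; otherwise one of the shadow strategies is a better fit.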

Branch prediction and scheduling

Speculation without prioritization is a cost sink. You need a scheduler that decides which branches to explore and for how long.

Reasonable choices:

- Heuristic scores: Domain-specific heuristics score a branch by proximity to target states (e.g., “cart page” detected, certain selector visible, form validated), content keywords, or model-provided confidence.

- Multi-armed bandits: Treat each branch as an arm with a reward estimate and cost. Use UCB1 or Thompson sampling to allocate steps/time proportional to promise.

- Beam search: Keep only the top-K branches by score at each depth; K is your parallelism budget.

- Value of information: Rank expansions by expected utility gain per unit cost, using model uncertainty as a proxy for information value.
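Two of these choices are simple enough to sketch directly. The snippet below shows beam pruning and a gain-per-cost ranking; the field names (`score`, `expectedGain`, `cost`) are placeholders for whatever estimates your agent produces.

```javascript
// Beam search step: keep the K highest-scoring branches, cull the rest.
function beamPrune(branches, k) {
  const ranked = [...branches].sort((a, b) => b.score - a.score);
  return { kept: ranked.slice(0, k), culled: ranked.slice(k) };
}

// Value-of-information ordering: expected utility gain per unit cost, best first.
function rankByGainPerCost(expansions) {
  const ratio = (e) => e.expectedGain / e.cost;
  return [...expansions].sort((a, b) => ratio(b) - ratio(a));
}

const { kept, culled } = beamPrune(
  [
    { id: 'A', score: 0.2 },
    { id: 'B', score: 0.9 },
    { id: 'C', score: 0.5 },
  ],
  2
);
console.log(kept.map((b) => b.id), culled.map((b) => b.id)); // prints [ 'B', 'C' ] [ 'A' ]

const order = rankByGainPerCost([
  { id: 'deep-form', expectedGain: 0.6, cost: 6 },
  { id: 'quick-click', expectedGain: 0.3, cost: 1 },
]);
console.log(order.map((e) => e.id)); // prints [ 'quick-click', 'deep-form' ]
```

The second example shows why cost matters: the cheaper expansion ranks first even though its raw expected gain is lower.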

A simple UCB-style scheduler stub:

```python
import math


class Branch:
    def __init__(self, id):
        self.id = id
        self.steps = 0
        self.reward = 0.0  # cumulative reward
        self.visits = 0


class UCBScheduler:
    def __init__(self, c=1.5):
        self.c = c  # exploration weight
        self.t = 0  # total selections so far

    def select(self, branches):
        self.t += 1

        def ucb(b):
            if b.visits == 0:
                return float('inf')  # try every branch at least once
            return b.reward / b.visits + self.c * math.sqrt(math.log(self.t) / b.visits)

        return max(branches, key=ucb)

    def update(self, branch, reward):
        branch.visits += 1
        branch.reward += reward
```

You can define reward as a weighted sum of signals: page classifier outputs, element presence, execution liveness (no errors), and distance-to-goal estimates.
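A minimal sketch of such a reward function follows. The signal names and the default weights are invented for illustration and would need calibration against your own tasks.

```javascript
// Illustrative weights; the signal names are assumptions, not a fixed schema.
const DEFAULT_WEIGHTS = {
  classifier: 0.4,     // page classifier output in [0, 1]
  elementPresent: 0.3, // target selector found (boolean)
  noErrors: 0.1,       // execution liveness (boolean)
  goalProximity: 0.2,  // distance-to-goal estimate mapped to [0, 1]
};

// Weighted average of signals, each clamped to [0, 1]. Missing or
// non-numeric signals count as 0. The result is also in [0, 1].
function branchReward(signals, weights = DEFAULT_WEIGHTS) {
  let total = 0;
  let weightSum = 0;
  for (const [name, weight] of Object.entries(weights)) {
    const raw = Number(signals[name] ?? 0); // booleans become 0 or 1
    const value = Number.isFinite(raw) ? Math.min(1, Math.max(0, raw)) : 0;
    total += weight * value;
    weightSum += weight;
  }
  return weightSum > 0 ? total / weightSum : 0;
}

console.log(
  branchReward({ classifier: 0.8, elementPresent: true, noErrors: true, goalProximity: 0.5 }).toFixed(2)
); // prints "0.82"
```

Normalizing by the weight sum keeps rewards comparable if you later add or remove signals, which matters when the values feed a bandit such as the UCB stub.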

Cost control and resource governance

Speculation must be bounded by budgets:

- Concurrency limit: Max active branches at once. Tune by CPU cores and memory. Headless Chrome can handle 5–10 light tabs on modest hardware; heavier sites may need fewer.

- Step/time budgets: Per-branch step caps and wall-clock limits. Aggressively prune slow or error-prone branches.

- Token/compute costs: If using an LLM in the loop (e.g., to parse DOM or plan actions), track per-branch token spend and stop branches that exceed ROI.

- Back-pressure triggers: Observe Performance.getMetrics and process memory; pause prefetch or reduce concurrency when thresholds are exceeded.

- Cache hygiene: Avoid cache thrashing by prefetching only what is likely to be used. Use HTTP cache-control headers as guidance.
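The first four budgets can be enforced by one small governor object that the orchestrator consults before spawning a branch and after each step. The class below is a sketch under assumed limits; the default numbers are placeholders, and the memory reading would come from your own telemetry.

```javascript
class SpeculationBudget {
  constructor({
    maxConcurrent = 4,
    maxStepsPerBranch = 5,
    maxTokensPerBranch = 4000,
    memoryLimitBytes = 2 * 1024 ** 3,
  } = {}) {
    this.limits = { maxConcurrent, maxStepsPerBranch, maxTokensPerBranch, memoryLimitBytes };
    this.spend = new Map(); // branchId -> { steps, tokens }
  }

  // Back-pressure: refuse new branches at the concurrency cap or above the memory threshold.
  canSpawn({ activeBranches, memoryBytes }) {
    return (
      activeBranches < this.limits.maxConcurrent &&
      memoryBytes < this.limits.memoryLimitBytes
    );
  }

  // Record spend for a branch. Returns true while the branch may take another
  // step, false once it has used up its step or token cap and should be pruned.
  charge(branchId, { steps = 0, tokens = 0 } = {}) {
    const current = this.spend.get(branchId) ?? { steps: 0, tokens: 0 };
    current.steps += steps;
    current.tokens += tokens;
    this.spend.set(branchId, current);
    return (
      current.steps < this.limits.maxStepsPerBranch &&
      current.tokens < this.limits.maxTokensPerBranch
    );
  }
}

const budget = new SpeculationBudget({ maxConcurrent: 2, maxStepsPerBranch: 3 });
console.log(budget.canSpawn({ activeBranches: 1, memoryBytes: 500e6 })); // prints "true"
console.log(budget.canSpawn({ activeBranches: 2, memoryBytes: 500e6 })); // prints "false"
console.log(budget.charge('b1', { steps: 1, tokens: 800 }));             // prints "true"
```

Keeping the policy in one place makes it easy to log every refusal, which in turn tells you whether the limits or the workload need adjusting.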

Evaluation: how to know it’s working

Rigor matters. Evaluate speculation against a sequential baseline across a suite of tasks:

- Benchmarks:

  - MiniWoB++ (browser automation micro-tasks): controlled environment to measure latency and success under ambiguity.

  - Mind2Web (Deng et al., 2023): open-domain web tasks annotated with goals; good for realistic navigation challenges.

  - Site-specific regressions: your internal critical flows (search, checkout, dashboard filtering), where you can instrument accurate success criteria.

- Metrics:

  - Task success rate and first-attempt success (no retries).

  - End-to-end latency to success (p50/p90).

  - Compute cost: CPU time, GPU time, memory high-water, LLM tokens.

  - Speculation overhead: work wasted on discarded branches.

  - Cache hit rate and bytes served from cache vs network.

  - Side-effect violations: count of blocked writes and accidental writes (accidental writes should be zero in strict mode).

- Ablations:

  - Disable prefetch, then disable parallelism, then disable snapshots, to isolate impact.

  - Vary number of branches (K) and budget per branch.

  - Compare different branch predictors (heuristic vs UCB vs greedy).
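A few of these metrics reduce to simple arithmetic over per-task records. The sketch below assumes each run is logged as `{ success, latencyMs, winnerMs, discardedMs }`, a record shape invented for this example, and uses the nearest-rank definition of a percentile.

```javascript
// Nearest-rank percentile: the smallest value with at least p% of samples at or below it.
function percentile(values, p) {
  if (values.length === 0) return NaN;
  const sorted = [...values].sort((a, b) => a - b);
  const rank = Math.ceil((p * sorted.length) / 100);
  return sorted[Math.max(0, rank - 1)];
}

// Aggregate success rate, latency-to-success percentiles, and wasted work.
function summarize(runs) {
  const latencies = runs.filter((r) => r.success).map((r) => r.latencyMs);
  const winnerMs = runs.reduce((sum, r) => sum + r.winnerMs, 0);
  const discardedMs = runs.reduce((sum, r) => sum + r.discardedMs, 0);
  const totalMs = winnerMs + discardedMs;
  return {
    successRate: runs.length ? latencies.length / runs.length : 0,
    p50LatencyMs: percentile(latencies, 50),
    p90LatencyMs: percentile(latencies, 90),
    // Share of branch time spent on branches that were thrown away.
    wastedFraction: totalMs > 0 ? discardedMs / totalMs : 0,
  };
}

console.log(
  summarize([
    { success: true, latencyMs: 100, winnerMs: 80, discardedMs: 120 },
    { success: true, latencyMs: 300, winnerMs: 200, discardedMs: 100 },
    { success: false, latencyMs: 900, winnerMs: 0, discardedMs: 500 },
  ])
);
```

Running the same summary over the sequential baseline (where `discardedMs` is always zero) gives the side-by-side comparison the evaluation calls for.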

Pitfalls and how to avoid them

- Overzealous prefetch: Fetching too aggressively can DDoS origins or waste bandwidth. Respect robots, rate limits, and only prefetch high-probability resources.

- False determinism: Overriding crypto/time may break apps that rely on these for integrity or security. Feature-flag determinism and never override in sensitive contexts (auth, payments).

- Cache non-sharing surprises: Separate BrowserContexts do not share HTTP cache. If you need isolation, accept the cache penalty or use an external caching proxy for shared benefit.

- Heisenbugs from event timing: networkidle2 is helpful but not perfect. Instrument idling with specific selectors or app-specific readiness events.

- POST-required flows: If the site requires POST to navigate, strict write-gating will stall. Use shadow identities or staging mirrors when possible; otherwise, defer exploration until commit.

- Memory ballooning: Many tabs with heavy pages will exhaust memory quickly. Close or freeze low-priority branches; CDP’s Target and Page domains let you detach or pause work. Consider Page.stopLoading and Runtime.discardConsoleEntries to reduce pressure.

An end-to-end flow, step-by-step

- Initialize the browser

  - Launch Chrome with a persistent userDataDir to enable disk cache reuse across runs, if appropriate.

  - Set consistent Emulation settings (timezone, locale, UA) for determinism.

- Establish the canonical boundary

  - Navigate to the starting page (post-login, pre-task). Wait for a robust ready condition.

  - Snapshot storage/cookies and record action log as empty.

- Spawn branches

  - For each alternative action at the decision point, open a new tab in the same BrowserContext.

  - Enforce determinism in each tab and enable the write gate.

  - Rehydrate storage/cookies if the branch needs the same session state.

- Prefetch shared resources

  - In any one branch (or a hidden helper tab), prefetch top-N candidate URLs and key assets.

  - Measure cache hit rate via Network.requestServedFromCache events and the fromDiskCache/fromPrefetchCache flags on Network.responseReceived to confirm prefetch efficacy.

- Explore in parallel

  - Each branch executes its candidate action(s). Keep an action log per branch with timestamps and selectors.

  - After a small budget (e.g., 1–3 actions), score the branch.

  - Cull or continue based on the scheduler’s decisions.

- Choose a winner

  - Select the branch with the highest utility estimate under budget constraints. Persist its action log.

- Commit cleanly

  - Open a fresh commit tab, restore the canonical session snapshot (cookies/storage), and disable write-gating.

  - Replay the winning action log exactly. If replay diverges, fall back to an adaptive replanning loop in the commit tab.

- Roll back and clean up

  - Close speculative tabs. Optionally clear analytics/tracking side-effects if recorded.

  - Persist telemetry for evaluation and future scheduling policy learning.
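For the telemetry step, one way to confirm prefetch efficacy is to tally cache hits from recorded CDP Network events. The sketch below assumes events were collected as raw `{ method, params }` objects and relies on `Network.requestServedFromCache` together with the `fromDiskCache` and `fromPrefetchCache` flags on the response in `Network.responseReceived`. The function name and the returned shape are illustrative.

```javascript
// Compute a cache hit rate from a list of recorded CDP Network events.
function cacheHitRate(events) {
  // First pass: requests the browser reported as served from cache.
  const servedFromCache = new Set();
  for (const { method, params } of events) {
    if (method === 'Network.requestServedFromCache') {
      servedFromCache.add(params.requestId);
    }
  }
  // Second pass: count responses and classify each as hit or miss.
  let responses = 0;
  let hits = 0;
  for (const { method, params } of events) {
    if (method !== 'Network.responseReceived') continue;
    responses += 1;
    const { fromDiskCache, fromPrefetchCache } = params.response;
    if (servedFromCache.has(params.requestId) || fromDiskCache || fromPrefetchCache) {
      hits += 1;
    }
  }
  return { responses, hits, hitRate: responses > 0 ? hits / responses : 0 };
}

// Hand-written sample events, trimmed to the fields this sketch reads.
const sample = [
  { method: 'Network.responseReceived', params: { requestId: '1', response: { fromDiskCache: true } } },
  { method: 'Network.requestServedFromCache', params: { requestId: '2' } },
  { method: 'Network.responseReceived', params: { requestId: '2', response: {} } },
  { method: 'Network.responseReceived', params: { requestId: '3', response: { fromDiskCache: false } } },
  { method: 'Network.responseReceived', params: { requestId: '4', response: { fromPrefetchCache: true } } },
];
console.log(cacheHitRate(sample)); // prints { responses: 4, hits: 3, hitRate: 0.75 }
```

Comparing this rate with prefetch enabled and disabled is a direct input to the prefetch ablation in the evaluation plan.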

Opinionated guidance on when speculation is worth it

- Use speculation when uncertainty at a branching point is high and branches are cheap to evaluate (e.g., clicking among top 3 search results). If each branch requires heavy form completion and server writes, speculation may not pay for itself.

- Invest early in prefetch quality. A few well-chosen prefetches can halve end-to-end time even with a single branch.

- Keep commit pristine. Do not try to “promote” a speculative tab into the committed one unless you are certain no writes occurred; you’ll avoid subtle state leaks by always re-initializing a commit tab.

- Start with strict write-gating. Later, add module-specific adapters for necessary POST flows on high-value domains under your control.

A more complete CDP example with branch sessions and selective snapshotting

```javascript
const CDP = require('chrome-remote-interface');

// Deterministic PRNG, installed before any page script runs
const SEED_SCRIPT =
  'Math.random = (() => { let s = 42; return () => ((s ^= s << 13, s ^= s >>> 17, s ^= s << 5) >>> 0) / 0x100000000; })();';

async function createPage(client, url, { speculative = true } = {}) {
  const { targetId } = await client.send('Target.createTarget', { url: 'about:blank' });
  const { sessionId } = await client.send('Target.attachToTarget', { targetId, flatten: true });
  // With flattened sessions, chrome-remote-interface takes the sessionId as an extra argument
  const send = (method, params = {}) => client.send(method, params, sessionId);

  await Promise.all([
    send('Page.enable'),
    send('Network.enable'),
    send('Fetch.enable', { patterns: [{ urlPattern: '*', requestStage: 'Request' }] }),
  ]);

  // Determinism
  await send('Emulation.setTimezoneOverride', { timezoneId: 'UTC' });
  await send('Emulation.setLocaleOverride', { locale: 'en-US' });
  await send('Page.addScriptToEvaluateOnNewDocument', { source: SEED_SCRIPT });

  // Write-gate (events for one session are emitted as '<Domain.event>.<sessionId>')
  client.on(`Fetch.requestPaused.${sessionId}`, async ({ requestId, request }) => {
    if (speculative && ['POST', 'PUT', 'PATCH', 'DELETE'].includes(request.method)) {
      await send('Fetch.failRequest', { requestId, errorReason: 'BlockedByClient' });
    } else {
      await send('Fetch.continueRequest', { requestId });
    }
  });

  // Subscribe before navigating so the load event cannot be missed
  const loaded = new Promise((resolve) => client.once(`Page.loadEventFired.${sessionId}`, resolve));
  await send('Page.navigate', { url });
  await loaded;
  return { send, sessionId, targetId };
}

(async function () {
  // Connect to the browser-level endpoint so the Target domain can create and attach to tabs
  const { webSocketDebuggerUrl } = await CDP.Version();
  const client = await CDP({ target: webSocketDebuggerUrl });
  const startUrl = 'https://example.com/search?q=widgets';
  const branchA = await createPage(client, startUrl, { speculative: true });
  const branchB = await createPage(client, startUrl, { speculative: true });
  // Branch exploration actions would go here.
  // Score, select winner, then create a fresh commit page without write-gate.
  await client.close();
})();
```

Security and compliance considerations

- Respect sites’ terms and robots: Prefetching and automated access must be rate-limited and within acceptable use.

- Do not override cryptographic primitives on pages where security matters. Keep determinism opt-in per domain.

- Treat snapshots as sensitive: Cookies, storage, and DOM snapshots may contain PII. Encrypt at rest, restrict access, and scrub when not needed.

Where to go next

- Learn-from-experience scheduling: Persist per-domain branching outcomes and embed them into predictors. Over time, your agent learns which branches typically lead to success.

- Site adapters for high-value flows: For a handful of critical sites, implement staging rewrites and POST simulations to get speculative benefits even for write-heavy flows.

- Centralize orchestration in a helper tab: Move prefetch logic and cache instrumentation into a single helper tab or worker to reduce duplication across branches.

- Integrate with a local HTTP cache proxy: Tools like mitmproxy (with explicit consent and legal checks) can provide cross-context caching and precise request control, at the cost of extra complexity.

Conclusion

Speculative execution for browser agents is not just a neat trick; it’s a principled way to trade parallelism for latency and robustness under uncertainty. With CDP, you can assemble the necessary pieces today: multi-tab branching with shared cache, deterministic snapshots at navigation boundaries, write-gating to avoid side effects, and a scheduler that spends effort where it’s most likely to pay off. The result is an agent that feels faster and fails less often—and crucially, one whose costs remain under control because you steer speculation with budgets and data.

If you adopt one idea from this piece, make it this: treat commit as a clean replay from a known good state. That single constraint simplifies rollback, makes results reproducible, and lets you scale speculation safely from a modest beam of two branches to a fleet of parallel plans when the payoff warrants it.