On‑Device LLMs for Agentic Browsers: WebGPU Inference, Quantization, Paged KV Cache, and Privacy‑First Fallback Pipelines

Agentic browsers are moving from mere renderers to autonomous clients that can read, plan, navigate, and act on the web, often while handling sensitive user data. Shipping the model to the user and running inference on-device dramatically narrows the privacy surface while reducing latency. Thanks to WebGPU, WASM, and careful engineering around quantization and memory, 3–7B parameter models are becoming viable in the browser today, with 13B feasible on higher-end GPUs.

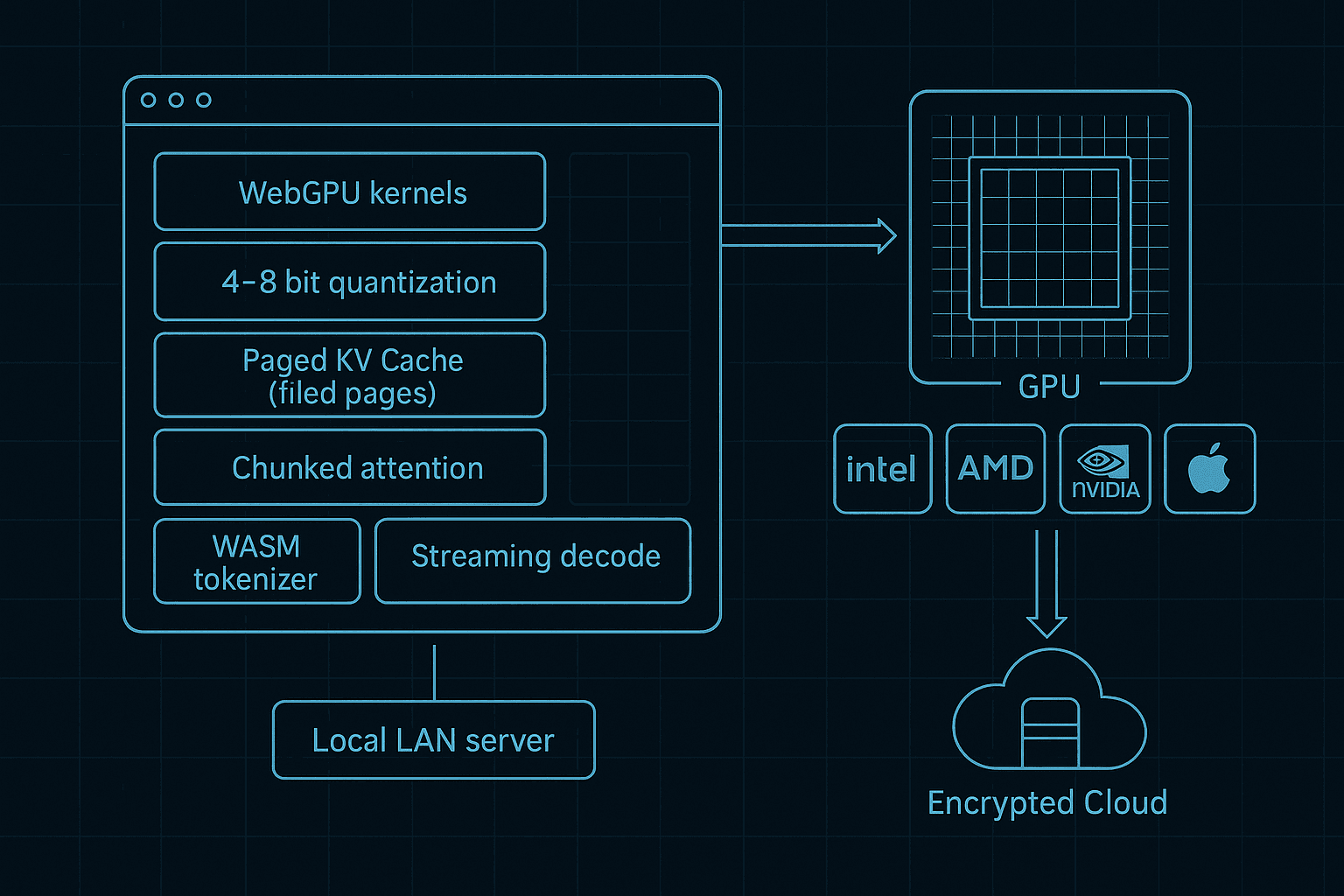

This article provides a hands-on blueprint for building a privacy-first, on-device LLM stack for agentic browsing. We will cover:

- WebGPU inference kernels (FP16, int8/int4 dequant-on-the-fly) and what is practical in browsers today.

- Weight-only 4–8 bit quantization strategies that preserve quality.

- Paged KV cache and chunked attention to scale context without blowing VRAM.

- A fast WASM tokenizer and worker architecture that does not block the UI thread.

- VRAM budgeting, streaming decode, and first-token latency tuning.

- A tiered fallback pipeline (device → LAN server → cloud) with privacy safeguards.

- Cross‑GPU CI and correctness testing across Intel/AMD/NVIDIA/Apple devices and major browsers.

The guidance is opinionated: choose small models, invest in cache management, measure everything, and prefer predictable memory formats over exotic tricks.

1) What is actually feasible on-device in 2026?

- 3B models: Smooth on midrange integrated GPUs (newer Intel/Apple) and mid-tier discrete GPUs. Excellent privacy/latency trade-off for agent tasks that require quick decisions.

- 7B models: Practical on desktop-class GPUs (RTX 3060 12 GB, RX 6800 16 GB, Apple M2/M3 with 16–24 GB unified memory). Requires 4–8 bit weight quantization and an efficient KV cache.

- 13B models: Feasible on high-end consumer GPUs (RTX 4080/4090, RX 7900) with 4-bit weights and careful KV compression, plus chunked attention for long contexts.

For agentic browsing, response quality is a function of model size, prompt design, retrieval grounding, and tool use. A solid 3–7B with effective tools and planning often beats a 13B without them. Browser agents benefit from improved grounding (DOM snapshotting, local page embeddings, small retrieval modules) more than from raw parameter counts.

2) WebGPU inference fundamentals (and limits that matter)

Key practical points for WebGPU in production:

- FP16 is essential. Request the 'shader-f16' feature for reducing bandwidth and increasing arithmetic throughput.

- Tiled compute is your friend. You will rely on classic matmul tiling with workgroup shared memory. Subgroups and cooperative matrix ops are emerging; use them opportunistically, but maintain a portable baseline.

- Keep your graph simple. WebGPU does not yet expose a full graph-execution abstraction; minimize command encoder churn, reuse pipelines, and amortize pipeline compilation costs.

- Beware adapter limits. Query device.limits for maxStorageBufferBindingSize, minStorageBufferOffsetAlignment, and maxComputeWorkgroupStorageSize. Pack your pages and buffers to alignment boundaries.

- Timestamp queries exist; use them to identify hotspots and regressions during CI.

A baseline inference pipeline:

- Preload quantized weights into GPU buffers (possibly streamed and transposed offline).

- For each decode step, run attention and MLP blocks layer by layer, writing to KV pages and intermediate buffers.

- Perform logits projection, sample on CPU, and proceed to next token.

3) Quantization: 8-bit and 4-bit weight-only schemes that survive the browser

Browser constraints favor weight-only quantization with on-the-fly dequantization inside matmul kernels. Two robust families:

- 8-bit per-channel (symmetric) with FP16 activations. Easy, stable, good for low-end GPUs. Typically <1–2% perplexity hit.

- 4-bit group-wise (e.g., per 64 or 128 input channels) with asymmetric scales. Supported by AWQ/GPTQ-like approaches; preserve critical channels and avoid over-quantizing outliers. Expect ~2–5% perplexity hit if tuned; minimal quality drop on instruction-tuned 3–7B models for agentic tasks.

Offline processing pipeline (opinionated):

- Start from FP16 safetensors.

- Run AWQ or GPTQ offline, pack into a transport format you control (or GGUF if you prefer established tooling). Precompute per-group scales and zero points in FP16.

- Transpose weight matrices into the layout your kernels prefer (usually column-major for W^T·x style) to make memory coalesced.

- Pack 4-bit weights into u32 words as 8 nibbles per word. Keep scales/zeros in contiguous FP16 arrays.

A simple fused dequant-matmul WGSL kernel sketch for int4 weights (illustrative, not fully optimized):

wgslenable f16; struct MatDims { M : u32, N : u32, K : u32, group_size : u32, }; @group(0) @binding(0) var<storage, read> A: array<f16>; // [M, K] @group(0) @binding(1) var<storage, read> Wq: array<u32>; // packed int4, [K, N/8] @group(0) @binding(2) var<storage, read> Scales: array<f16>; // [N * (K/group_size)] or [N] if per-channel @group(0) @binding(3) var<storage, read_write> C: array<f16>; // [M, N] @group(0) @binding(4) var<uniform> D: MatDims; fn get_nibble(word: u32, idx: u32) -> u32 { // idx in [0,7] return (word >> (idx * 4u)) & 0xFu; } @compute @workgroup_size(8, 8, 1) fn main(@builtin(global_invocation_id) gid: vec3<u32>) { let m = gid.y; let n = gid.x; if (m >= D.M || n >= D.N) { return; } var acc: f16 = f16(0.0); var k: u32 = 0u; var group_idx: u32 = 0u; var scale: f16 = f16(1.0); while (k < D.K) { if ((k % D.group_size) == 0u) { group_idx = (k / D.group_size) * D.N + n; scale = Scales[group_idx]; } // Load 8 packed int4 weights for columns [n], advance by 8 in K. let col_pack_base = (k / 8u) * (D.N / 8u) + (n / 8u); let wpack = Wq[col_pack_base]; // Consume 8 nibbles and 8 activations (A[m, k..k+8]) for (var i: u32 = 0u; i < 8u; i = i + 1u) { if (k + i >= D.K) { break; } let q = get_nibble(wpack, n % 8u); // simplified example; normally pack across K // map 4-bit [0..15] to signed [-8..7] let q_signed = i32(q); // reinterpretation let q_centered = f16(f32(q_signed) - 8.0); let a_val = A[m * D.K + (k + i)]; acc = acc + (a_val * (q_centered * scale)); } k = k + 8u; } C[m * D.N + n] = acc; }

Notes:

- Real kernels tile M and N into workgroup tiles (e.g., 64×64), stage A tiles into workgroup memory, and pack Wq to match how subgroups load data. The snippet shows the dequantization-in-the-loop idea, not the production layout.

- For 8-bit, replace nibble extraction with byte extraction and adjust scaling.

- Keep scales/zeros in FP16 and prefer per-channel or per-group scales for predictable quality.

4) Tokenization in WASM without blocking the UI

LLM tokenization can dominate first-token latency if implemented poorly in JS. Best practice:

- Use a WASM port of your tokenizer (SentencePiece, tiktoken, or Hugging Face tokenizers). Load once, reuse across tabs via a SharedWorker when possible.

- Run tokenization inside a dedicated Web Worker. Avoid copying strings repeatedly; reuse ArrayBuffers and slice views.

- Stream tokenization for long inputs: produce tokens in batches to feed the decode loop sooner.

Minimal worker sketch (message passing):

js// tokenizer-worker.js let tok; // WASM tokenizer instance self.onmessage = async (e) => { const { type, payload } = e.data; if (type === 'init') { // payload: WASM binary URL, vocab, merges, etc. tok = await initTokenizer(payload); postMessage({ type: 'ready' }); } else if (type === 'encode') { const ids = tok.encode(payload.text, { addBos: true }); postMessage({ type: 'encoded', ids }, [ids.buffer]); } else if (type === 'decode') { const text = tok.decode(payload.ids); postMessage({ type: 'decoded', text }); } };

On the main thread:

jsconst worker = new Worker('tokenizer-worker.js', { type: 'module' }); await new Promise((resolve) => { worker.onmessage = (e) => { if (e.data.type === 'ready') resolve(); }; worker.postMessage({ type: 'init', payload: { /* wasm+model urls */ } }); }); function encode(text) { return new Promise((resolve) => { worker.onmessage = (e) => { if (e.data.type === 'encoded') resolve(new Uint32Array(e.data.ids)); }; worker.postMessage({ type: 'encode', payload: { text } }); }); }

Keep tokenization off the UI thread to ensure DOM interactions remain fluid during agent tasks.

5) KV cache engineering: paged, aligned, and eviction-aware

Self-attention scales with sequence length and the memory cost of storing keys and values for each layer. A naive KV cache allocates a gigantic [layers × heads × seq_len × head_dim × 2 × dtype] tensor. That will not survive in a browser.

Paged KV cache is the remedy: store KV tensors in fixed-size pages, reuse freed pages from finished sequences, and avoid reallocating massive contiguous buffers. This mirrors vLLM’s design but adapted to WebGPU constraints.

Memory math per token (approximate):

- Per token KV bytes ≈ 2 × hidden_dim × layers × bytes_per_element

- Example (7B; hidden_dim=4096, layers=32):

- FP16 KV: 2 × 4096 × 32 × 2 bytes ≈ 524,288 bytes ≈ 0.5 MB/token

- 8-bit KV: halve that to ≈ 0.25 MB/token (quality impact depends on quantization strategy)

- 13B (hidden_dim=5120, layers=40): FP16 KV ≈ 0.78 MB/token

Paged cache design for WebGPU:

- Choose a page size in tokens, e.g., 16 or 32 tokens per page, to balance internal fragmentation and binding overhead.

- Allocate a single large GPUBuffer for K and one for V per layer (or a single interleaved buffer), subdivided into fixed-size page slots aligned to device.limits.minStorageBufferOffsetAlignment.

- Maintain a free-list of page indices and a map from (sequence_id, block_index) to page index.

- Store per-sequence metadata (logical positions, past length, attention mask type) in a small CPU array mirrored to a uniform buffer each step.

Pseudocode for page manager:

tsclass KVPageAllocator { constructor(device, layers, heads, headDim, pageTokens, dtypeBytes) { this.pageBytes = heads * headDim * pageTokens * dtypeBytes; this.pageBytesAligned = align(this.pageBytes, device.limits.minStorageBufferOffsetAlignment); this.totalPages = /* compute from VRAM budget */; this.free = new Uint32Array(this.totalPages); // allocate buffers per layer for K and V this.bufK = new Array(layers); this.bufV = new Array(layers); for (let l = 0; l < layers; l++) { this.bufK[l] = device.createBuffer({ size: this.totalPages * this.pageBytesAligned, usage: GPUBufferUsage.STORAGE }); this.bufV[l] = device.createBuffer({ size: this.totalPages * this.pageBytesAligned, usage: GPUBufferUsage.STORAGE }); } } allocPage() { /* pop from free list */ } freePage(idx) { /* push to free list */ } pageOffset(idx) { return BigInt(idx) * BigInt(this.pageBytesAligned); } }

Binding with dynamic offsets:

- WebGPU supports dynamic offsets for storage buffers. Create a bind group layout with storage buffers that specify dynamic offsets. At dispatch, specify offsets returned by pageOffset(idx).

- Keep a small staging uniform that tells the shader how many valid tokens exist in the last page, to handle partial pages.

Eviction and reuse:

- When a sequence finishes or truncates, return its pages to the free list.

- To support very long contexts, implement LRU across long-running sequences and fall back to chunked attention for older tokens.

6) Chunked attention (aka blockwise or streaming attention)

Chunked attention reduces peak memory use by processing attention in fixed-size windows and accumulating results. It is compatible with paged caches:

- Partition the K/V history into blocks of e.g. 256 tokens.

- For each new query block (1 or a few tokens during decoding), iterate through past blocks, compute partial attention scores, apply numerically stable softmax with running max and sum, and accumulate outputs.

- Keep only the current chunk in fast workgroup memory; stream past chunks from storage.

Advantages:

- Reduces pressure on workgroup memory and lessens worst-case latency spikes.

- Makes long contexts feasible on smaller VRAM, at the cost of more kernel launches.

Implementation hints:

- Use per-chunk scaling to avoid softmax underflow: maintain running max m and sum s, update with chunk maxima; rescale the accumulator on each update.

- Pay attention to alignment: chunk sizes should map cleanly to page sizes to avoid partial-page overheads.

7) VRAM budgets: pick models and contexts that fit

Rough VRAM budget formula:

- Weights (quantized):

- 7B at 4-bit: ~3.5–4.0 GB (plus scales/metadata ≈ +10–15%)

- 7B at 8-bit: ~7.0–8.0 GB

- 3B at 4-bit: ~1.5–1.8 GB

- KV cache (per token): see earlier math. Multiply by target concurrent contexts and expected max sequence length.

- Activations & scratch: 0.5–1.5 GB depending on tiling and temporary buffers.

Example targets:

- Apple M2 16 GB unified: 7B Q4 with 2–4K context, single active session, chunked attention for longer.

- RTX 3060 12 GB: 7B Q4 at 2–3K tokens; 3B Q4 at 8K with chunking and 8-bit KV.

- RTX 4090 24 GB: 13B Q4 at 8K; 7B Q4 at 16K with chunked attention and careful KV quantization.

Practical advice:

- Start with 3B Q4 to validate kernels and UX; move to 7B only if quality requires it.

- Default KV to FP16 for safety; consider 8-bit KV only after measuring answer quality on your tasks.

- Treat long-context support as a feature gated by chunked attention and eviction policies, not as a default unlimited slider.

8) Streaming decode and first-token latency

The decode loop emits one token at a time; every microsecond of overhead matters. Focus on:

- Pipeline warmup: create all compute pipelines at load time, dispatch tiny dummy workloads to JIT and cache pipelines before the first real token.

- Command encoder reuse: record predictable sequences of passes; avoid creating encoders and bind groups in hot loops when possible.

- Staging buffers: reuse upload buffers; batch small uniform updates.

- Overlap CPU and GPU: while the GPU computes layer L, the CPU can update next-step metadata and run sampling.

A minimal decode loop outline:

tsasync function decodeLoop(initIds, maxNewTokens) { let ids = initIds; for (let t = 0; t < maxNewTokens; t++) { // 1) Embed last token(s) // 2) For each layer: attention + MLP, reading/writing KV pages for (let l = 0; l < numLayers; l++) { // set bind groups with dynamic offsets for this sequence's KV pages // dispatch attention kernel for layer l // dispatch MLP kernels } // 3) Logits projection // 4) Read back small logits slice or full vocab (prefer top-k from GPU if implemented) const nextId = sampleFromLogitsGPUorCPU(); ids.push(nextId); // 5) Stream back partial text on a timer budget if ((t % streamEvery) === 0) streamToUI(ids); if (nextId === eosId) break; } return ids; }

First-token latency checklist:

- Tokenization in worker, not main thread.

- All pipelines created and warmed up.

- Weights resident in GPU memory; no per-step uploads.

- KV buffers preallocated and pages available.

- Avoid JS GC storms: reuse typed arrays, avoid transient objects inside the hot loop.

9) Privacy-first fallback: device → LAN → cloud

On-device is the default. When the device cannot meet model/context demands (memory or latency), fall back gracefully:

Tier 1: On-device (WebGPU + WASM)

- Best privacy. No data leaves the device.

- Select smallest model that meets the task quality requirements. Allow the user to set a strict VRAM cap.

Tier 2: Local LAN server (optional)

- Companion process on a local machine (desktop/home server) reachable via mDNS or known IP.

- Use HTTPS with self-signed cert pinned by fingerprint; pair devices via QR code.

- Enables larger models and long contexts with minimal privacy trade-offs (data stays in the LAN).

Tier 3: Privacy-preserving cloud

- Only used with explicit consent or when the user opts into a higher-quality model.

- Encrypt prompts/responses end-to-end in the browser using ephemeral public key cryptography (e.g., HPKE or Noise). The server processes decrypted data in a short-lived enclave or applies strict data retention policy.

- Hard caps: zero logging of content, no training on user data, scrub after job completion.

- Transparency: live view of routing choice, costs, and model used; exportable audit trail of the last N runs.

Routing policy example:

- Attempt device inference; if memory error or estimated latency > user threshold, try LAN.

- If LAN unavailable or still slow, prompt user to allow cloud. Respect a per-domain policy (e.g., never send content from banking sites off-device).

- Cache routing decisions per site/task and surface a one-click override.

10) Cross‑GPU CI and correctness testing

A browser inference stack must run across GPU vendors and browsers. Invest in CI:

Matrix:

- Browsers: Chrome/Edge (stable/canary), Firefox (nightly), Safari (Tech Preview on macOS).

- GPUs: Intel Arc (Windows/Linux), AMD RDNA (Windows/Linux), NVIDIA RTX (Windows/Linux), Apple Silicon (macOS). Use self-hosted runners for GPUs; cloud runners rarely expose WebGPU with discrete GPUs reliably.

- OSes: Windows 11, Ubuntu LTS, macOS Sonoma.

Tests:

- Feature probes: FP16 support, maxStorageBufferBindingSize, dynamic offsets.

- Microbenchmarks: dense matmul, attention chunk, dequant kernels; produce perf baselines and flag regressions.

- Golden correctness: run a fixed prompt with deterministic sampling (temperature=0, fixed seed), compare logits or token outputs within tolerance.

- Memory guards: attempt to allocate near the VRAM budget and ensure graceful failure with fallback.

Automation hints:

- Use WebDriver to launch browsers headless and run a harness page that exposes test results via console logs or WebSocket.

- Capture GPU timestamps and JS timings; store in CI artifacts for trend graphs.

- Run nightly on each GPU host; tie alerts to significant perf regressions (>10%) or correctness diffs.

11) Example: a minimal WebGPU linear layer with 8-bit weights

While production kernels will be fused and tiled, a simple example illustrates buffer layout and dispatch. Here we do A[M×K] × Wq[K×N] → C[M×N] with per-channel scales.

WGSL kernel for uint8 weights:

wgslenable f16; struct Dims { M:u32; N:u32; K:u32; }; @group(0) @binding(0) var<storage, read> A: array<f16>; // [M*K] @group(0) @binding(1) var<storage, read> Wq: array<u32>; // packed u8 (4 per u32) @group(0) @binding(2) var<storage, read> Sc: array<f16>; // [N] @group(0) @binding(3) var<storage, read_write> C: array<f16>;// [M*N] @group(0) @binding(4) var<uniform> D: Dims; fn get_byte(word:u32, idx:u32) -> u32 { return (word >> (idx*8u)) & 0xFFu; } @compute @workgroup_size(16, 16) fn main(@builtin(global_invocation_id) gid: vec3<u32>) { let m = gid.y; let n = gid.x; if (m >= D.M || n >= D.N) { return; } var acc: f16 = f16(0.0); let scale = Sc[n]; for (var k:u32 = 0u; k < D.K; k = k + 4u) { let wIdx = (k/4u)*D.N + n; // 4 weights per u32 let packed = Wq[wIdx]; for (var i:u32=0u; i<4u; i=i+1u) { if (k+i >= D.K) { break; } let w = f16(f32(get_byte(packed, i)) - 128.0) * scale; // symmetric centered let a = A[m*D.K + (k+i)]; acc = acc + a*w; } } C[m*D.N + n] = acc; }

JS setup snippet:

jsconst adapter = await navigator.gpu.requestAdapter({ powerPreference: 'high-performance' }); const device = await adapter.requestDevice({ requiredFeatures: ['shader-f16'] }); function createBufferF16(device, arr) { const buf = device.createBuffer({ size: arr.byteLength, usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST }); device.queue.writeBuffer(buf, 0, arr.buffer); return buf; } // Assume A_f16, Wq_u32, Sc_f16 are TypedArrays (Uint16Array for f16 bit patterns) const bufA = createBufferF16(device, A_f16); const bufW = device.createBuffer({ size: Wq_u32.byteLength, usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_DST }); device.queue.writeBuffer(bufW, 0, Wq_u32.buffer); const bufS = createBufferF16(device, Sc_f16); const bufC = device.createBuffer({ size: M*N*2, usage: GPUBufferUsage.STORAGE | GPUBufferUsage.COPY_SRC | GPUBufferUsage.COPY_DST }); const dims = new Uint32Array([M, N, K]); const bufD = device.createBuffer({ size: 12, usage: GPUBufferUsage.UNIFORM | GPUBufferUsage.COPY_DST }); device.queue.writeBuffer(bufD, 0, dims.buffer); const module = device.createShaderModule({ code: wgslSource }); const pipeline = device.createComputePipeline({ layout: 'auto', compute: { module, entryPoint: 'main' } }); const bind = device.createBindGroup({ layout: pipeline.getBindGroupLayout(0), entries: [ { binding: 0, resource: { buffer: bufA } }, { binding: 1, resource: { buffer: bufW } }, { binding: 2, resource: { buffer: bufS } }, { binding: 3, resource: { buffer: bufC } }, { binding: 4, resource: { buffer: bufD } }, ]}); const encoder = device.createCommandEncoder(); const pass = encoder.beginComputePass(); pass.setPipeline(pipeline); pass.setBindGroup(0, bind); pass.dispatchWorkgroups(Math.ceil(N/16), Math.ceil(M/16)); pass.end(); device.queue.submit([encoder.finish()]);

This is intentionally simple: production code tiles, fuses, and reduces readbacks by sampling on the GPU and only returning the chosen token id to the CPU.

12) Sampling and on-GPU top-k

To avoid copying full-vocab logits (tens of thousands) to the CPU each step, implement on-GPU top-k and return only k candidates:

- Use a segmented top-k on the GPU output vector. For small k (e.g., 20–50), a single pass with a small heap per threadgroup suffices.

- Apply temperature and repetition penalties on the GPU if possible; otherwise, fetch only top-k logits and finalize sampling on CPU.

- Determinism: fix RNG seed per session to enable reproducible CI tests.

13) A practical agent stack architecture

- Frontend:

- React/Svelte UI for text stream and action panel.

- Web Worker for tokenization and for orchestration; main thread handles DOM.

- WebGPU for model inference; keep device state in a singleton per tab.

- Storage:

- IndexedDB for weights caching, composite files (GGUF or custom), versioned by model hash and browser build.

- Persist routing preferences and audit summaries.

- Tools:

- DOM reader to snapshot relevant nodes as text; small local embedding model (WASM) to rank snippets.

- Browser Actions: click, type, navigate; gated by user confirmation, with replay controls.

- Fallback client:

- gRPC-Web or HTTP/2 to LAN/cloud endpoints, HPKE-encrypted payloads, signed responses with model id and usage.

14) Opinionated guidance and pitfalls

- Prefer weight-only 4-bit for 7B and below, but keep KV in FP16 unless benchmarks show negligible quality loss. 8-bit KV is usually okay for instruction-tuned models; 4-bit KV often harms generation coherence.

- Invest in a robust paged KV cache early. It unlocks batching and prevents OOM flakiness.

- Pretranspose weights offline; do not transpose in the browser.

- Keep your kernels boring and predictable; wins from extreme packing tricks can be wiped out by driver variability across vendors.

- Always surface a user-facing privacy indicator showing whether inference is on-device, LAN, or cloud.

- Do not fight the GC: pool typed arrays, recycle bind groups and encoders, and pre-allocate structures for the hot path.

15) Monitoring, metrics, and guardrails

- Latency breakdown: tokenization, first-layer attention, MLP, logits projection, sampling.

- GPU timestamps: mark begin/end of each major kernel; store histograms to detect regressions.

- Memory watermarks: track allocated pages, free list depth, and soft limits.

- Quality probes: nightly prompts with expected outputs; alert on drift.

- User controls: max VRAM, max tokens, strict privacy mode (device-only), and a panic button to abort runs.

16) Roadmap: where things are going

- Subgroups and cooperative matrix ops in WebGPU will narrow the gap to native TensorCores/Matrix Cores. Expect 1.3–2× speedups without changing model architectures.

- WebNN compute graph APIs may eventually let you predefine graphs; until then, optimize command streams and caching.

- Better browser caching and streaming fetch APIs will reduce cold-start time for large weight files.

- Model-side: small specialized models (planner, summarizer, DOM-grounded reader) combined via routing often beat a single monolith for agent tasks in the browser.

17) Putting it together: a minimal build checklist

- Choose a 3B or 7B base, quantize weights offline to 4-bit with per-group scales.

- Convert to a transport format with pretransposed matrices and aligned pages.

- Implement FP16 tiled matmul with int4 dequant-on-the-fly; add attention and MLP blocks.

- Build a paged KV cache with aligned pages and dynamic offsets.

- Add chunked attention for long contexts; verify numerical stability of softmax accumulation.

- Tokenizer in WASM Worker; incremental encode/decode.

- Streaming decode with warmed pipelines; on-GPU top-k.

- VRAM budgeter that picks model/context automatically and triggers fallback when necessary.

- Tiered fallback with cryptographic protections and transparent UI.

- CI across GPU vendors and browsers with golden correctness tests and perf baselines.

Conclusion

On-device LLM inference in the browser has crossed the threshold of practicality for agentic tasks. With WebGPU kernels tuned for FP16 and quantized weights, a paged KV cache, chunked attention, and a well-designed streaming decode loop, you can deliver private, low-latency experiences that handle most everyday automation without leaving the device. A tiered fallback path rounds out the system for heavy requests, and cross‑vendor CI keeps regressions at bay.

Keep models modest, measure everything, and make privacy the default. The result is an agentic browser that feels fast, respects user data, and runs anywhere from integrated GPUs to high-end desktops.